Drug development is a complex, time-consuming process with multiple stages, including molecular design, screening, and several phases of testing. The key bottleneck here is chemical synthesis, a costly and inefficient process. This blog post delves into recent advances in methods inspired by natural language processing (NLP) that have been designed to accelerate this critical stage and, in turn, drug discovery. We will explore retrosynthesis, property prediction, and the emergence of autonomous chemistry agents. Join us as we unravel the key insights that enabled researchers to harness NLP algorithms and apply them effectively in chemistry!

Transformers and NLP

The Transformer architecture has been a game-changer in the field of NLP. This neural network is now used for all sorts of language modeling tasks, like summarization or translation. Built mainly from self-attention layers, it excels at learning how different parts of a sequence relate to each other. Basically, Transformers are great at connecting information within a tokenized sequence.
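To make "connecting information within a tokenized sequence" a bit more concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is a deliberate simplification and not how production models are written: real Transformers apply learned query, key, and value projections and use several attention heads, whereas this toy version assumes identity projections (queries, keys, and values are all the raw token vectors). The function names are ours, chosen for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Toy self-attention over a list of equal-length token vectors.

    Assumption: identity projections, i.e. Q = K = V = tokens.
    Returns (outputs, weights), where weights[i][j] says how much
    token i attends to token j.
    """
    dim = len(tokens[0])
    outputs, weights = [], []
    for query in tokens:
        # Similarity of this token to every token in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        row = softmax(scores)
        weights.append(row)
        # New representation: attention-weighted mix of all token vectors.
        outputs.append(
            [sum(w * value[i] for w, value in zip(row, tokens)) for i in range(dim)]
        )
    return outputs, weights


# Three toy tokens; the first and third are identical.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = self_attention(tokens)
```

In this toy input, the first token attends equally to itself and to the identical third token, and less to the second one, which is the "relating parts of a sequence" behavior described above.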

Now, what makes the Transformer powerful is how adaptable it is. Once data is turned into a sequence of tokens, the Transformer can be used for plenty of scientific tasks. For instance, in bioinformatics, where DNA and proteins are naturally represented as sequences of nucleotides and amino acids, AlphaFold2 has revolutionized the field. This system accurately predicts the 3D structure of proteins, solving a major problem in biochemistry and making it one of the hottest tools in biology right now [1, 8].

As we will discuss further, this transformative approach is now being applied to the field of organic chemistry, opening up exciting new possibilities for research and discovery.

Modeling the language of Organic Chemistry

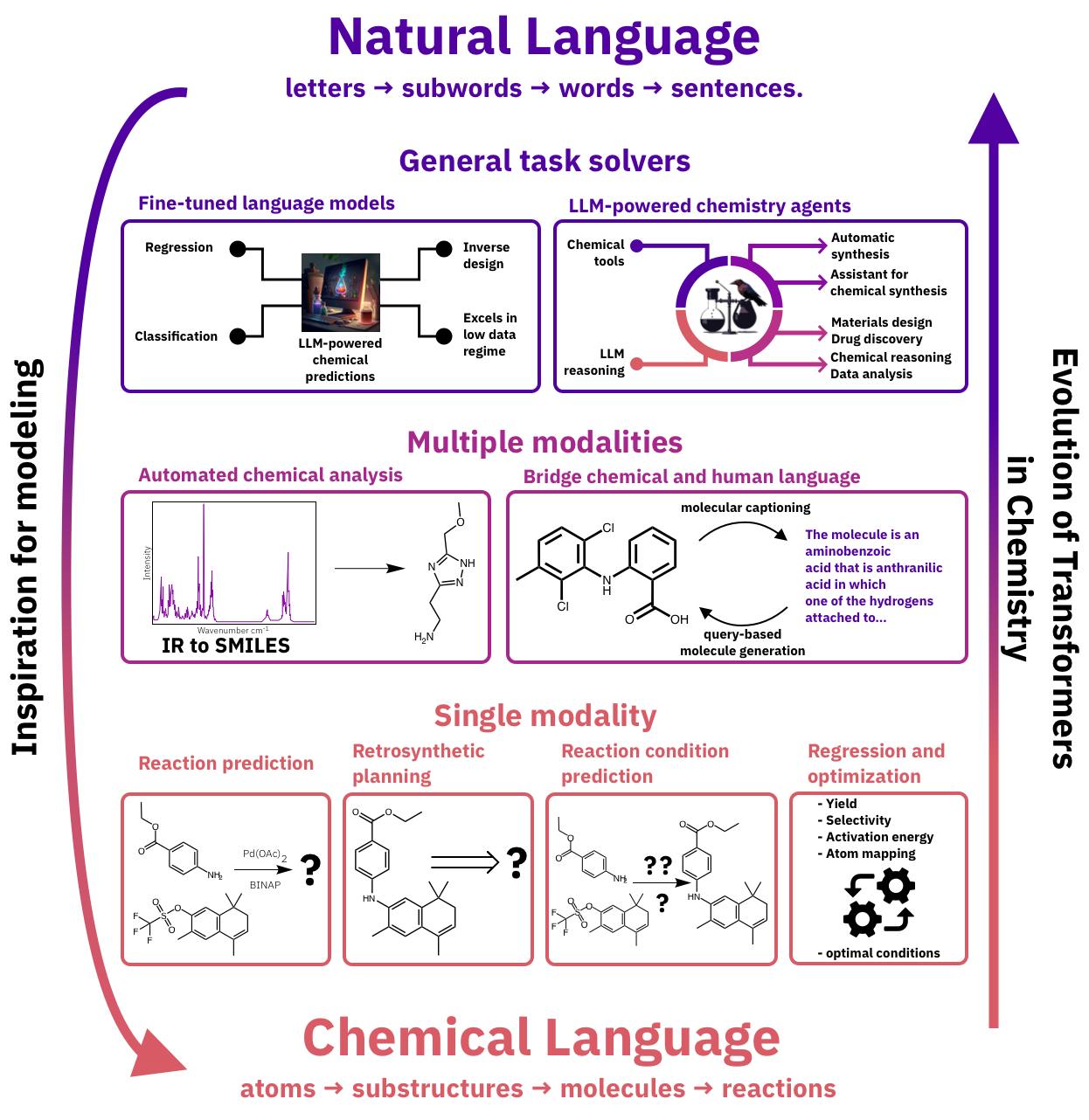

The evolution of NLP methods in chemistry follows a fascinating path composed of three phases (see Figure 1), beginning with analogies between chemical language and natural language. This comparison has enabled the creation of transformer models capable of reading and generating molecular structures, useful for tasks like retrosynthesis and regression.

As the field evolved, additional forms of data were added to models to unlock unprecedented possibilities. One group trained a model for elucidating molecules from IR spectra, while another trained a model for generating molecules from linguistic descriptions. Now, chemists are not just drawing inspiration from NLP methods; they're also using them as a direct resource for developing language-based applications in chemistry.

Phase 1: Molecular Transformers

Turning chemical tasks into text sequences, along with the rise of open datasets and benchmarks, allowed chemists to train Transformers that take molecules as input and return molecules as output: Molecular Transformers. This marked a revolution in the field, starting with key chemical challenges that are crucial to drug development, like predicting reaction products and retrosynthetic planning.

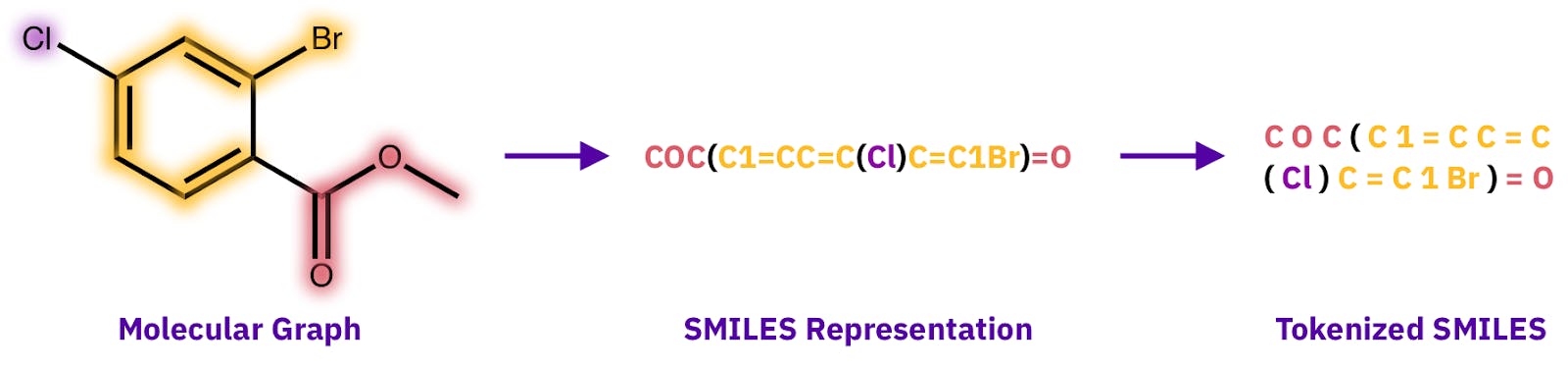

However, adapting these methods wasn't straightforward: the diverse structural motifs in organic molecules make it hard to view them as sequences. A way around this was to use linear string representations of molecules and reactions, like SMILES and SELFIES, along with field-specific tokenization methods [10].
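As a rough illustration of what "field-specific tokenization" can look like, the sketch below splits a SMILES string into chemically meaningful tokens with a regular expression, then turns a reaction SMILES (written as `reactants>agents>products`) into a source/target pair for a sequence-to-sequence model. The pattern and the helper names `tokenize` and `reaction_to_pair` are our own simplified assumptions for this post, not the exact tokenizer from [10], and the pattern does not cover every corner of the SMILES grammar.

```python
import re

# Simplified atom-wise pattern (an assumption, not a full SMILES grammar):
# bracket atoms first, then two-letter halogens, then single-letter atoms,
# ring-closure numbers, and bond/branch/separator symbols.
SMILES_TOKEN = re.compile(
    r"(\[[^\]]+\]|Br|Cl|[BCNOSPFI]|[bcnosp]|%\d{2}|\d|[()=#\-+\\/:~@?>*$.])"
)


def tokenize(smiles):
    """Split a SMILES string into tokens; fail loudly on unknown characters."""
    tokens = SMILES_TOKEN.findall(smiles)
    if "".join(tokens) != smiles:
        raise ValueError(f"Could not tokenize: {smiles!r}")
    return tokens


def reaction_to_pair(reaction_smiles):
    """Turn 'reactants>agents>products' into (source, target) token strings.

    This is the forward-prediction direction; swapping the two returned
    values gives the single-step retrosynthesis direction.
    """
    reactants, _agents, products = reaction_smiles.split(">")
    return " ".join(tokenize(reactants)), " ".join(tokenize(products))


# Chlorobenzene: "Cl" stays one token instead of splitting into "C" and "l".
chlorobenzene_tokens = tokenize("c1ccccc1Cl")

# Acetic acid + ethanol -> ethyl acetate (water omitted, no agents listed).
source, target = reaction_to_pair("CC(=O)O.OCC>>CC(=O)OCC")
```

The point of tokenizing this way, rather than character by character, is that multi-character units such as `Cl`, `Br`, or a bracket atom like `[NH4+]` reach the model as single symbols.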

These insights set the stage for the first uses of Transformers in chemistry. They led to powerful retrosynthesis systems [11, 13], and new ways to explore the chemical space [9, 12].

Phase 2: Articulating chemical language and other modalities

Although powerful, Molecular Transformers ignore a lot of information that goes beyond molecular representations. Chemical reactions also involve a bunch of other types of data, or modalities. These can include spectra from analytical techniques and linguistic descriptions that give us details and explanations of molecular processes.

Researchers have explored these connections by training Transformers on specific types and combinations of data. For example, they have used these models for elucidating molecules from their IR [2] and NMR [3] spectra. Other key applications involve predicting experimental steps from reaction SMILES [14], and molecular captioning, where the aim is to describe a molecule using words.

These applications mark the second step in the evolution of NLP methods in chemistry (see Figure 1 middle). They gradually bring in more modalities and forms of language, but they're not yet quite as versatile as the latest NLP models.

Phase 3: Advanced applications: Towards general chemistry-language models

Recent breakthroughs in Large Language Models (LLMs) have taken the connection between chemistry and language to a whole new level. Scaling models with massive datasets and compute, along with paradigms like fine-tuning and in-context learning, has made it possible to model chemistry directly in natural language, which is a more flexible way of describing the scientific process and its results.

For example, fine-tuned LLMs have shown pretty impressive capabilities, like predicting molecular properties in zero- and few-shot settings [7]. These models are sometimes even better than task-specific, expert-designed models, especially in the low-data regime, which is the usual case in chemistry. The reason they're so good is probably that they learn from large text corpora, which lets them transfer knowledge to new tasks more efficiently.
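To give a feel for what a few-shot setting means in practice, here is a minimal sketch that packs a handful of labeled molecules and one unlabeled query into a single text prompt. The prompt layout and the helper name `build_few_shot_prompt` are hypothetical and chosen only for illustration; they are not the format used in [7], and sending the prompt to an actual model is left out on purpose.

```python
def build_few_shot_prompt(examples, query_smiles, property_name):
    """Format labeled (SMILES, label) pairs plus one query as plain text.

    The layout is an illustrative assumption: each molecule gets a small
    block, and the final block leaves the label blank for the model.
    """
    blocks = [f"Predict the {property_name} of each molecule."]
    for smiles, label in examples:
        blocks.append(f"Molecule: {smiles}\n{property_name}: {label}")
    blocks.append(f"Molecule: {query_smiles}\n{property_name}:")
    return "\n\n".join(blocks)


# Two qualitative examples: ethanol mixes freely with water, hexane does not.
examples = [
    ("CCO", "high"),
    ("CCCCCC", "low"),
]
prompt = build_few_shot_prompt(examples, "CCCCO", "solubility in water")
print(prompt)
```

With only two examples in the prompt, this is exactly the kind of low-data situation described above: the model has to lean on what it already learned from text rather than on a large task-specific training set.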

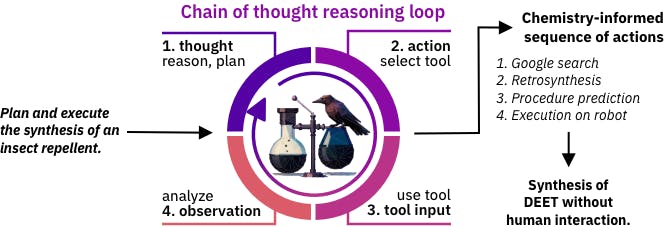

LLMs have also been used as agents, taking advantage of their reasoning and tool-using capabilities. These agents can provide a text-based interface for complex chemical tasks that require planning and analysis [4], and do so by using multiple tools in a goal-oriented way. For example, ChemCrow [5] was given the task of synthesizing an insect repellent. With access to a robotic chemistry lab among its tools, ChemCrow identified a target molecule with the desired function, planned the synthesis, and figured out the details of the synthesis process within the robotic facility. The result was the successful production of DEET, a widely used insect repellent, marking a big milestone in the use of LLMs in chemistry.

This last step marks the third phase, and brings us full circle: chemistry can now be modeled just like any other language! We're back to the methods that inspired the original applications, letting researchers take advantage of more of the breakthroughs that have happened over the years in NLP.

We've got an exciting future ahead of us! We look forward to the novel discoveries that these techniques will facilitate in chemistry and drug discovery. To learn more, please check out our original preprint [6].

References